“DataOps is a collaborative data management practice focused on improving the communication, integration and automation of data flows between data managers and data consumers across an organization. The goal of DataOps is to deliver value faster by creating predictable delivery and change management of data, data models and related artifacts. DataOps uses technology to automate the design, deployment and management of data delivery with appropriate levels of governance, and it uses metadata to improve the usability and value of data in a dynamic environment.”

Source: Gartner

What are the challenges addressed by DataOps?

DataOps emerged to address three main challenges that companies launching data initiatives invariably face:

Challenge #1 – Cohesion between the teams

Stakeholders such as IT, analytics and business teams mostly work in silos, and their data and analytics goals for a given project may differ. Their cultures often differ as well, which makes it difficult to align them.

Challenge #2 – Process efficiency

Most data and analytics projects are handcrafted and rarely reach production. They end up consuming a great deal of time and budget while producing immature technology, so they are considered high-risk, especially in the case of homegrown Big Data solutions.

Challenge #3 – Diversity of technologies

The fragmented and ever-changing Big Data and AI landscape, with its many open-source frameworks, is hard to integrate and maintain, which again leads organizations to view their Big Data and AI initiatives as high-risk investments.

What are the consequences of these issues?

- Value is hard to demonstrate: Only 27% of CxOs consider their Big Data projects valuable.

- Implementation is long: Designing and deploying an AI pilot is estimated to take between 12 and 18 months.

- Projects often never reach production: Only 50% of AI projects are actually deployed.

What is the DataOps approach?

DataOps is a new approach to data projects based on two well-known practices: DevOps and Agile.

DevOps

DevOps is a set of practices that closes the gap between development and operations teams so that they work and iterate together throughout a project.

Agile

Agile methodologies rely on practices such as continuous delivery, "fail fast" and "test and learn" to deploy use cases quickly, which builds confidence within teams.

DevOps is commonly associated with Agile, since both promote short development cycles, many iterations and frequent deployments. The purpose of this combined approach is to deliver software continuously, incorporating user feedback to seize more business opportunities.

DataOps applies the same principles to data processing in order to simplify and accelerate the delivery of data analytics. In practice, it combines teams, tools and processes to bring agility, orchestration and control to projects from start to finish. Properly applied within an organization, it can be a game changer.

How to implement DataOps?

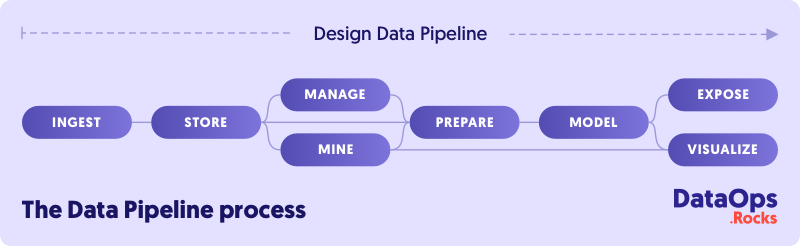

DataOps follows a series of steps to automate the design, deployment and management of data delivery with appropriate levels of governance, and it uses metadata to improve the usability and value of data in a dynamic environment.

At the core of this process sits a data pipeline: the succession of stages that data goes through within a project, starting with its extraction from various data sources and ending with its exposure or visualization for business consumption.
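To make the idea of a pipeline concrete, here is a minimal Python sketch (3.9+) that chains extraction, cleaning, modeling and exposure as plain functions over in-memory lists of dictionaries. The stage names (`extract`, `clean`, `aggregate`, `expose`), the `run_pipeline` helper and the `region`/`amount` fields are hypothetical illustrations, not part of any DataOps product; real pipelines would read from databases or files and typically run under an orchestrator.

```python
from typing import Callable

# Hypothetical record and stage types: a record is one row, a stage maps a batch to a new batch.
Record = dict[str, object]
Stage = Callable[[list[Record]], list[Record]]


def extract(sources: list[list[Record]]) -> list[Record]:
    """Hypothetical extraction: merge rows from several sources, copying them so later stages never mutate the inputs."""
    return [dict(row) for source in sources for row in source]


def clean(rows: list[Record]) -> list[Record]:
    """Hypothetical cleaning: drop rows without an amount and normalize region codes."""
    return [
        {**row, "region": str(row["region"]).strip().upper()}
        for row in rows
        if row.get("amount") is not None
    ]


def aggregate(rows: list[Record]) -> list[Record]:
    """Hypothetical modeling step: total amount per region, sorted by region."""
    totals: dict[str, float] = {}
    for row in rows:
        key = str(row["region"])
        totals[key] = totals.get(key, 0.0) + float(row["amount"])
    return [{"region": region, "total": total} for region, total in sorted(totals.items())]


def expose(rows: list[Record]) -> str:
    """Hypothetical exposure step: a plain-text report for business users."""
    return "\n".join(f"{row['region']}: {row['total']:.2f}" for row in rows)


def run_pipeline(sources: list[list[Record]], stages: list[Stage]) -> str:
    """Run extraction, then each intermediate stage in order, then exposure."""
    data = extract(sources)
    for stage in stages:
        data = stage(data)
    return expose(data)


if __name__ == "__main__":
    crm = [{"region": " eu ", "amount": 10}, {"region": "us", "amount": None}]
    erp = [{"region": "EU", "amount": 5.5}, {"region": "us", "amount": 3}]
    print(run_pipeline([crm, erp], [clean, aggregate]))  # prints "EU: 15.50" then "US: 3.00"
```

Keeping each stage a pure function over a batch makes stages easy to test in isolation and to reorder or replace, which is the property DataOps tooling relies on when it automates a pipeline.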

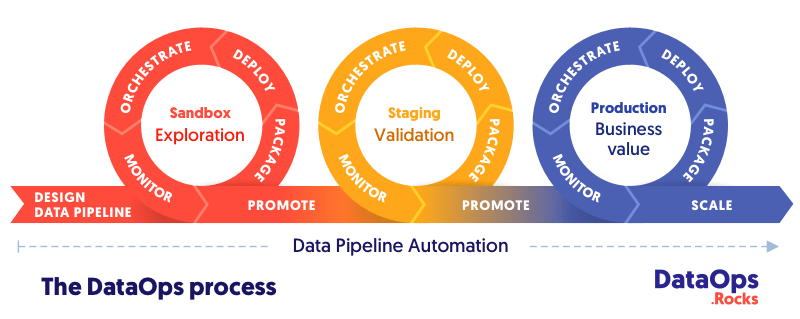

By leveraging CI/CD (continuous integration and continuous delivery) practices, DataOps orchestrates and automates this pipeline so that it scales properly to production. The whole process can be illustrated as a succession of three loops, in which data models are promoted from one environment to the next as new data enters the pipeline.
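The promotion of models between environments can be sketched as a small state machine driven by CI checks. This is a minimal Python sketch (3.9+) under stated assumptions: the `Env` enum, the `GATES` table, the `promote` function and the model fields (`tests_passed`, `documented`, `accuracy`) along with the 0.9 accuracy threshold are all hypothetical, not taken from any specific CI/CD tool.

```python
from enum import IntEnum
from typing import Callable


class Env(IntEnum):
    """The three loops, ordered so that promotion means moving to the next value."""
    SANDBOX = 0
    STAGING = 1
    PRODUCTION = 2


Model = dict[str, object]
Check = Callable[[Model], bool]

# Hypothetical gates: the checks a model must pass to ENTER each environment.
GATES: dict[Env, list[Check]] = {
    Env.STAGING: [lambda m: m.get("tests_passed") is True],
    Env.PRODUCTION: [
        lambda m: m.get("tests_passed") is True,
        lambda m: bool(m.get("documented")),
        lambda m: float(m.get("accuracy", 0.0)) >= 0.9,  # hypothetical threshold
    ],
}


def promote(model: Model, current: Env) -> Env:
    """Simulate one CI/CD run: move the model up one environment if the next gate passes."""
    if current is Env.PRODUCTION:
        return current  # already in the last loop
    target = Env(current + 1)
    if all(check(model) for check in GATES[target]):
        return target
    return current  # stay in the current loop and keep iterating
```

For example, a model with `{"tests_passed": True, "documented": False, "accuracy": 0.93}` moves from `Env.SANDBOX` to `Env.STAGING`, then stays in staging until `documented` becomes `True`, at which point the next run promotes it to `Env.PRODUCTION`. Encoding gates as data rather than code paths keeps the promotion rules reviewable and versionable, in the same spirit as the rest of the CI/CD setup.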

Loop #1 – Sandbox

Raw data is explored to produce a first set of rough analyses. This lets data teams get creative and probe the organization’s data for any value it might hold. Meticulous data cleaning, mapping and modeling are not yet required, since the emphasis is on fast experimentation rather than rigorous validity.

Loop #2 – Staging

Data is properly cleaned and documented, and the initial models are refined through successive iterations to gradually improve their quality. Models are validated once they are judged trustworthy enough to reach production.
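Judging whether data is "trustworthy enough" is usually automated with data-quality checks. The Python sketch below (3.9+) shows one possible shape for such a check in staging: it profiles a batch for row count, per-column null rates and duplicate rows. The `QualityReport` and `profile` names, the chosen metrics and the default 5% null-rate threshold are hypothetical assumptions for illustration; dedicated data-validation tools offer much richer checks.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    row_count: int
    null_rate: dict[str, float]  # fraction of rows where each column is None
    duplicate_rows: int          # rows identical (on the profiled columns) to an earlier row

    def is_trustworthy(self, max_null_rate: float = 0.05) -> bool:
        """Hypothetical validation rule: non-empty, no duplicates, few nulls in every column."""
        return (
            self.row_count > 0
            and self.duplicate_rows == 0
            and all(rate <= max_null_rate for rate in self.null_rate.values())
        )


def profile(rows: list[dict[str, object]], columns: list[str]) -> QualityReport:
    """Compute simple quality metrics; assumes column values are hashable scalars."""
    n = len(rows)
    null_rate = {
        col: (sum(1 for row in rows if row.get(col) is None) / n if n else 0.0)
        for col in columns
    }
    seen: set[tuple] = set()
    duplicates = 0
    for row in rows:
        key = tuple(row.get(col) for col in columns)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return QualityReport(row_count=n, null_rate=null_rate, duplicate_rows=duplicates)
```

For instance, profiling `[{"id": 1, "amount": 10.0}, {"id": 2, "amount": None}, {"id": 1, "amount": 10.0}]` on `["id", "amount"]` reports one duplicate row and a null rate of one third for `amount`, so `is_trustworthy()` returns `False` and the model would keep iterating in staging.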

Loop #3 – Production

Finally, fully refined analytic models are promoted to production, where data consumers use them in their daily activities. Consumers can draw on the resulting insights to improve and accelerate decision-making across the company, ultimately generating long-term business value and ROI.

What are the benefits of DataOps?

It aligns teams so they can deliver value faster through predictable data delivery.

It improves data quality across the data pipeline to ensure analytics can be trusted.

It encourages reproducibility, which further accelerates value creation by eliminating repetitive work across projects.